Introducing Maple Proxy – the Maple AI API that brings encrypted LLMs to your OpenAI apps

How many apps do you come across on a daily basis that are infused with AI? Calorie trackers, CRMs, study companions, relationship apps 👀. Most of them use ChatGPT on the backend, meaning they share your data with OpenAI. All of it. It doesn't need to be this way.

Today we’re proud to launch Maple Proxy, a lightweight bridge that lets you call Maple’s end‑to‑end‑encrypted large language models (LLMs) from any OpenAI‑compatible client. The only thing you change is the endpoint URL.

Your existing OpenAI libraries keep working.

Your data stays inside a hardware‑isolated enclave.

The Maple Proxy is great for:

- People who run AI tools on their computer that call out to OpenAI

- Developers who ship apps that call out to OpenAI

Using Jan to run the largest DeepSeek R1 671B with full encryption through Maple AI

If you're stoked on the idea already, feel free to jump straight to our Maple Proxy documentation and get started.

What ships in the box?

Maple runs inference inside a Trusted Execution Environment (TEE). That gives you:

- End‑to‑end encryption – prompts and responses stay encrypted all the way to the enclave, so nobody outside it can read them, not even us.

- Cryptographic attestation – you can verify you’re talking to genuine secure hardware.

- Zero Data Retention – no logs, no training data, nothing stored in the clear.

All of that security used to mean extra plumbing in your code (handshakes, key exchanges, attestation checks). Maple Proxy does the heavy lifting for you, exposing a drop‑in OpenAI‑style endpoint that speaks the same JSON schema you already know.
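To make "the same JSON schema" concrete, here is a minimal sketch of the request body an OpenAI‑style chat endpoint expects and how a reply is read back out. The helper names are ours, the model id is assumed to be written with plain ASCII hyphens, and the sample response is a hand‑written placeholder in the OpenAI response shape, not real model output.

```python
import json


def build_chat_request(model, user_message):
    """Build a chat-completions request body in the OpenAI JSON schema."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_message}],
    }


def extract_reply(response):
    """Return the assistant text from an OpenAI-style chat-completions response."""
    return response["choices"][0]["message"]["content"]


# Assumption: the model id uses plain ASCII hyphens.
request_body = build_chat_request("llama3-3-70b", "Suggest a healthy breakfast.")
print(json.dumps(request_body, indent=2))

# Hand-written placeholder in the OpenAI response shape, not real model output.
sample_response = {
    "choices": [
        {"index": 0, "message": {"role": "assistant", "content": "(model reply goes here)"}}
    ]
}
print(extract_reply(sample_response))
```

Nothing in this shape is Maple‑specific, which is the point: the encryption and attestation happen in the proxy, not in your payload.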

Ready‑to‑Use Models (pay‑as‑you‑go)

| Model | Typical Use‑Case | Price* |
|---|---|---|
| llama3‑3‑70b | General reasoning, daily tasks | $4 input / $4 output |
| gpt‑oss‑120b | Creative chat, structured data | $4 input / $4 output |
| deepseek‑r1‑0528 | Advanced math, research, coding | $4 input / $4 output |
| kimi-k2.5 | Complex agentic workflows, multi-step coding, math, image analysis | $4 input / $4 output |
| qwen3-vl-30b | Image and video analysis, screenshot-to-code, OCR, GUI automation | $4 input / $4 output |
| gemma‑3‑27b‑it‑fp8‑dynamic | Fast image analysis | $10 input / $10 output |

*Prices are per million tokens. API usage draws from the paid subscription plan credits first. If additional credits are needed, they can be purchased in increments starting at $10.
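As a rough budgeting aid, the per‑million‑token pricing works out like this. This is a sketch only: it reads "in / out" as the same price for input and output tokens, writes model ids with plain ASCII hyphens, and `estimate_cost` is a helper name we made up for illustration.

```python
# USD per million tokens as (input, output), copied from the table above.
PRICE_PER_MILLION = {
    "llama3-3-70b": (4.0, 4.0),
    "deepseek-r1-0528": (4.0, 4.0),
    "gemma-3-27b-it-fp8-dynamic": (10.0, 10.0),
}


def estimate_cost(model, input_tokens, output_tokens):
    """Estimate the cost in USD of one workload for the given model."""
    price_in, price_out = PRICE_PER_MILLION[model]
    return (input_tokens * price_in + output_tokens * price_out) / 1_000_000


# 250k input tokens and 50k output tokens: (250,000 * 4 + 50,000 * 4) / 1,000,000
cost = estimate_cost("llama3-3-70b", 250_000, 50_000)
print(f"${cost:.2f}")  # prints $1.20
```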

Real‑World Ways to Use Maple Proxy

A calorie‑counting mobile app (for everyday users)

A developer can replace the public OpenAI calls that power “suggest healthy meals” with a call to Maple Proxy. The app sends the user’s food log to the private LLM, receives a tailored meal plan, and the user’s dietary data never leaves the secure enclave. The experience feels the same, but privacy is secured.

Internal knowledge‑base search for a tech startup (for developers)

Your team’s internal documentation lives in a private repository. By routing the retrieval‑augmented‑generation queries through Maple Proxy, the LLM can answer “How do we deploy the new microservice?” without ever exposing the confidential architecture details to an external provider.

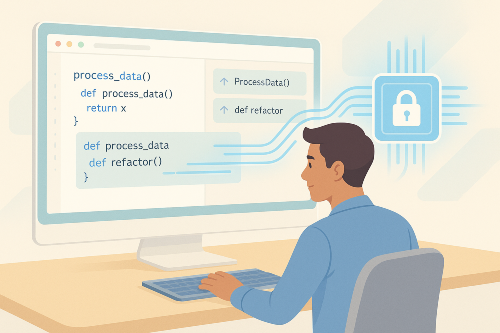

Coding‑assistant plug‑in for an IDE (for developers)

Take any coding environment that uses the OpenAI API and point its client at http://localhost:8080/v1. The assistant can suggest snippets, refactor functions, or explain errors, while your proprietary code is only ever processed inside a hardware‑isolated enclave. No additional SDKs or libraries are required: just change the base URL. Whether you're building production code or simply vibe coding your next idea, Maple Proxy keeps your code private.

These examples illustrate how the same simple “change the endpoint” step unlocks private, secure AI for any workflow, from consumer‑facing apps to internal tooling.

How to Get Started

1. Desktop App – Fastest for Local Development

- Download the Maple desktop app → trymaple.ai/downloads

- Sign up / upgrade to Pro, Team, or Max (plans start at $20 / month)

- Open the Local Proxy tab and click “Start Proxy.”

The app automatically creates an API key, starts a proxy on localhost:8080 (or a port you choose), and handles all attestation and encryption behind the scenes. From this point on you can point any OpenAI client to http://localhost:8080/v1 and everything just works.
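If you want to see what "pointing a client at the proxy" amounts to on the wire, here is a minimal standard‑library sketch. It assumes the proxy serves the usual OpenAI path `/chat/completions` under the base URL and accepts the API key as a Bearer token; whether the local proxy actually requires that header may depend on how it is configured. `make_request` and `send` are illustrative names, not part of any Maple SDK.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8080/v1"  # local proxy address from the text


def make_request(api_key, model, prompt, base_url=BASE_URL):
    """Build (but do not send) an OpenAI-style chat request aimed at the proxy."""
    payload = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumption: Bearer auth
        },
        method="POST",
    )


def send(request, timeout=60.0):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


req = make_request("YOUR_MAPLE_API_KEY", "llama3-3-70b", "Explain this stack trace.")
print(req.get_method(), req.full_url)
```

With the proxy running, `send(req)` performs the call. In a real app you would normally keep your existing OpenAI library and only change its base URL.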

2. Stand‑alone Docker Image – Production‑Ready

Pull the image

docker pull ghcr.io/opensecretcloud/maple-proxy:latest

Run it (replace YOUR_MAPLE_API_KEY with a key from your Maple dashboard)

docker run -p 8080:8080 \
  -e MAPLE_BACKEND_URL=https://enclave.trymaple.ai \
  -e MAPLE_API_KEY=YOUR_MAPLE_API_KEY \
  ghcr.io/opensecretcloud/maple-proxy:latest

You now have a secure, OpenAI‑compatible endpoint at http://localhost:8080/v1. A ready‑to‑use docker‑compose template is included in the repository for longer‑running services.
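When the container is part of a longer‑running service, your app may start before the proxy is accepting connections. One way to handle that is a small readiness check. The sketch below only tests that something is listening on the mapped port (8080 in the command above); it assumes nothing about the proxy's own endpoints, and the function names are ours.

```python
import socket
import time


def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def wait_for_proxy(host="localhost", port=8080, attempts=30, delay=1.0):
    """Poll until the port accepts connections or the attempts run out."""
    for _ in range(attempts):
        if is_port_open(host, port):
            return True
        time.sleep(delay)
    return False
```

Call `wait_for_proxy()` once at startup and only send your first request after it returns `True`.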

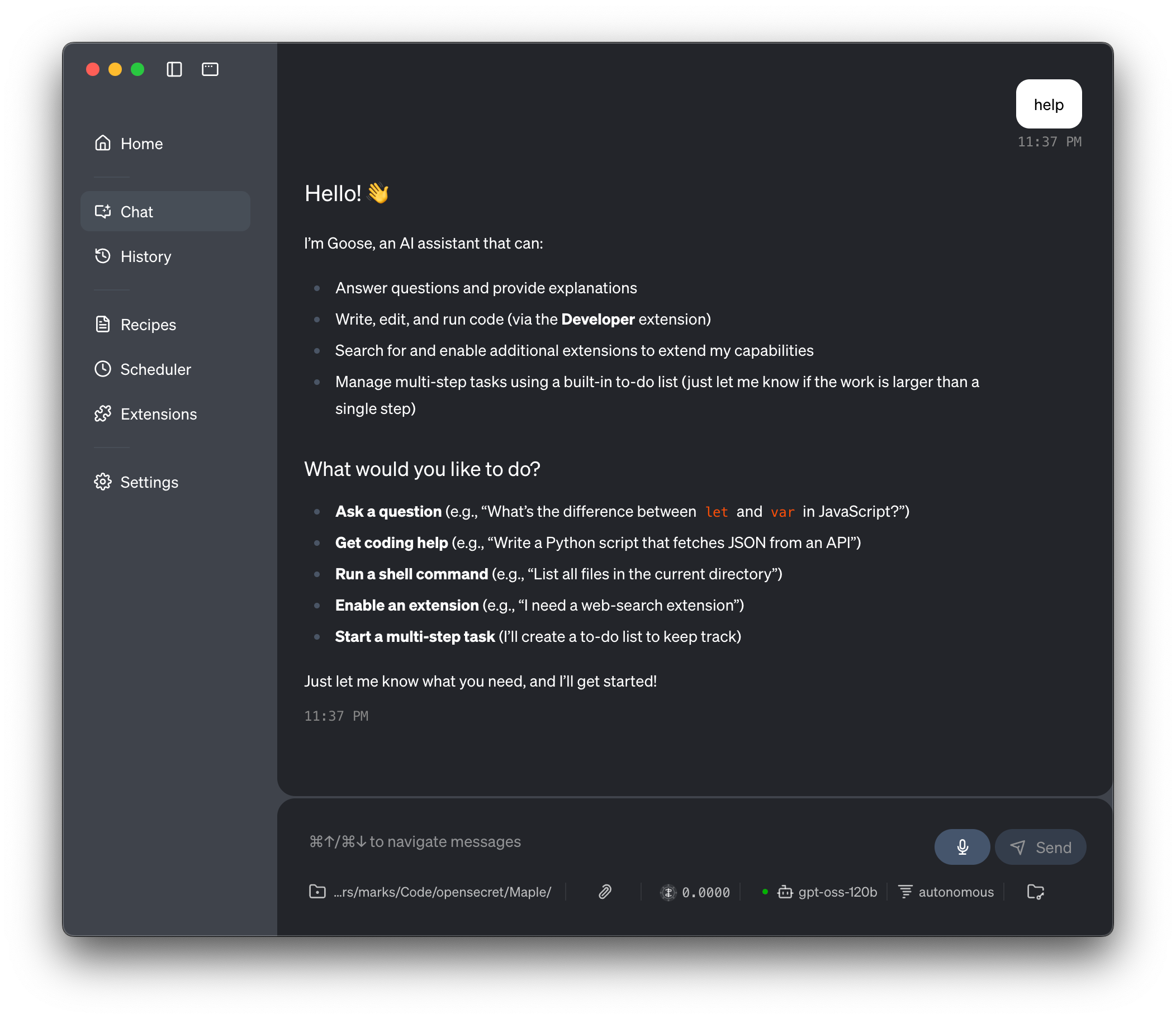

Works With Any OpenAI‑Compatible Tool

If a library lets you set a base_url or api_base, it works with Maple Proxy. That includes LangChain, LlamaIndex, Amp, Open Interpreter, Goose, Jan, and virtually any other OpenAI‑compatible SDK. No code changes beyond the endpoint URL are needed.
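Some tools take the endpoint from the environment rather than from code. As a sketch, recent versions of the official OpenAI Python SDK read `OPENAI_BASE_URL` and `OPENAI_API_KEY`; other tools may use different variable names, so check the documentation for yours. `maple_env` is a hypothetical helper that prepares such an environment for a child process.

```python
import os


def maple_env(api_key, base_url="http://localhost:8080/v1", base=None):
    """Return a copy of the environment that points OpenAI clients at the proxy."""
    env = dict(os.environ if base is None else base)
    env["OPENAI_BASE_URL"] = base_url  # assumption: the tool reads this variable
    env["OPENAI_API_KEY"] = api_key
    return env


env = maple_env("YOUR_MAPLE_API_KEY", base={})
print(env["OPENAI_BASE_URL"])
```

You could then launch a tool with `subprocess.run([...], env=maple_env("YOUR_MAPLE_API_KEY"))` and leave its own configuration untouched.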

Need More Detail?

- Technical write‑up – Using the Maple Proxy

- Full API reference – https://github.com/opensecretcloud/maple-proxy

- Discord community – real‑time help and feature discussions

Start Building with Private AI – Today

- Download the desktop app or pull the Docker image.

- Upgrade to a Pro/Team/Max plan (starting at $20 / month).

- Point any OpenAI client to http://localhost:8080/v1.

Let's start securing all the apps together.