Using AI Safely in Your Therapy Practice: A Comprehensive Guide

“Confidentiality allows clients to be vulnerable and honest without fear of their information being misused.” — Kristin M. Papa, LCSW (https://www.livingopenhearted.com/post/chatgpt-therapist)

Artificial‑intelligence tools are suddenly everywhere, promising to draft progress notes, brainstorm treatment plans, and handle the administrative work that eats into a clinician’s evenings. But while the productivity upside is obvious, the privacy risks are just as clear: a single copy‑paste of a client’s trauma narrative into the wrong chatbot could violate regulations, break ethical guidelines, and shatter trust.

This guide walks through how therapists and counselors can tap into the power of AI without compromising confidentiality. You’ll learn:

- Why security matters more than ever when using AI in mental‑health care

- Common pitfalls with popular, public AI tools

- How Maple’s end‑to‑end‑encrypted approach keeps clinical data private by design

- A step‑by‑step workflow you can adopt today—complete with consent language and privacy checklists

Why Security & Confidentiality Must Lead Every AI Conversation

Therapeutic rapport is built on trust. Clients disclose information they would never share elsewhere—often details that, if exposed, could harm careers, relationships, or personal safety. That’s why every major professional body (ACA, APA, NASW) reminds clinicians that technology must never jeopardize client confidentiality.

Unfortunately, risky habits are already widespread. A recent Cisco survey found that 48 percent of organizations have input non‑public company information into public AI apps. (1) In healthcare, that kind of data leakage isn’t just embarrassing; it can trigger regulatory penalties and malpractice claims.

The Stakes for Therapists

- Legal exposure – Exposing unsecured client data can constitute a breach under privacy laws such as HIPAA.

- Ethical violations – Codes of ethics mandate that clinicians protect client records and obtain informed consent before using any third‑party service.

- Client harm – Loss of privacy can re-traumatize clients or deter them from future treatment.

If we want the efficiency of AI, we must ensure the technology meets the same confidentiality bar as our locked filing cabinets.

What Goes Wrong with Typical AI Tools

Most headline‑grabbing AI apps rely on cloud servers that record interactions, use them to further train large language models, or hand data to subcontractors. Even if the platform offers an “incognito” mode or a Zero Data Retention (ZDR) policy, clinicians have no cryptographic proof that the text can’t be read later.

Common Pitfalls

Public AI assistants can compromise client confidentiality in several ways. Watch for these common pitfalls:

- Server-side storage of prompts: Client data may sit unencrypted on vendor servers for days or months, posing a significant risk to client confidentiality.

- Model-training reuse: Input text can be ingested to improve models, risking accidental disclosure in future completions. This means that sensitive client information could potentially be exposed, even if it's not intentionally shared.

- Opaque security claims: Be wary of vendors that claim to "take privacy seriously" without providing verifiable encryption methods. A clear and transparent security protocol is crucial to ensuring client data remains protected.

A fourth, often unspoken, pitfall also deserves attention:

- Censorship and account flagging: Automated content filters can misread sensitive clinical topics (self-harm, trauma, abuse) as policy violations, freezing your account or wiping a transcript mid-session. Language policing like this chills client disclosure and hampers therapy-grade AI work.

Understanding these pitfalls lets you mitigate the risks and keep client confidentiality and security at the center of your AI use.

Bottom line: a therapist cannot ethically paste a raw session transcript into a generic chatbot and hope for the best.

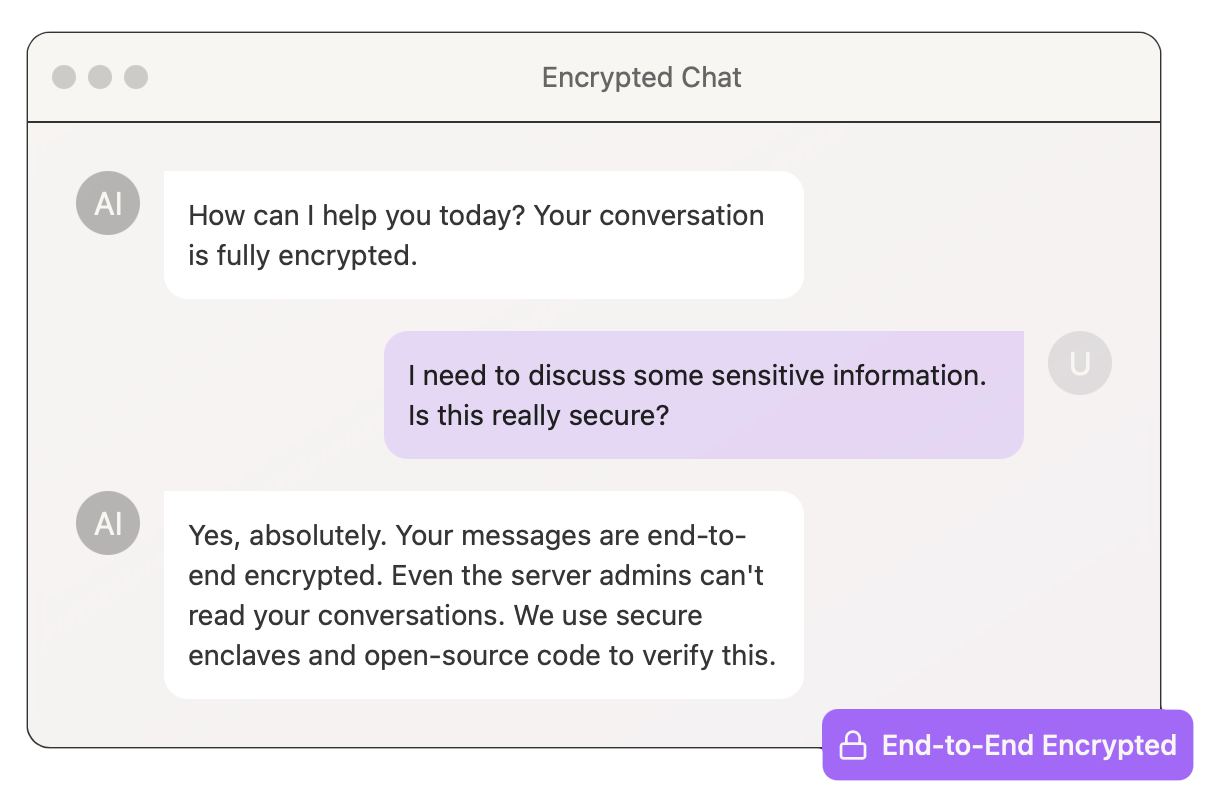

Maple: End‑to‑End Encryption Built for Clinical Workflows

Maple was engineered for professionals who handle sensitive data every day—from therapists and counselors to lawyers and accountants. The core promise is simple: your conversations are encrypted on your device, in transit, and in memory on our servers—so private that even Maple’s engineers can’t read them.

Key safeguards include:

- Confidential‑computing servers – Maple runs inside secure enclaves that prove, cryptographically, that only the open‑source code you can inspect is running.

- Zero data retention – Conversations are not kept for model training. Your text never trains anyone else’s AI.

- HIPAA‑aware design – Maple's feature set is intended to support compliance, but it is the responsibility of our customers to ensure they meet all relevant regulatory requirements.

- Local‑first encryption keys – Encryption keys are generated client‑side; Maple never sees or stores them in plaintext.

Because privacy is handled at the architecture level—not via policy—therapists can safely harness AI functionality without rewriting their risk‑management plan every time the terms of service change.

📣 Mental Health Awareness Month Bonus 🎁

Sign up for a Maple Starter or Pro plan using your clinic or practice email address anytime in May and get an extra month—free!

How to claim:

- Create (or upgrade to) a Starter or Pro subscription using your professional email.

- In the Maple app, tap Account → Contact Us and send a quick note with the subject line “Free Month”.

- We’ll verify and credit your account with an additional month.

Because private, encrypted AI should be as affordable as it is secure. 💚

A Secure, Ethical AI Workflow for Therapists

The following workflow balances efficiency with professional ethics. Adapt it to your practice setting, and follow these steps whenever you use a privacy-preserving AI assistant:

Step 1: Obtain Informed Consent

- Action: Explain to clients that you use a privacy-preserving AI assistant to help with note summarization or resource generation. Clarify that no identifiable data leaves your encrypted workspace.

- Privacy & Ethics Check: ACA tech guidelines recommend explicit consent before using digital tools with client data. (See recommendations for practicing counselors for more information.)

Step 2: Use Client Initials or Codes

- Action: When feeding session bullets into Maple, reference clients by initials or a short code rather than full names.

- Privacy & Ethics Check: This adds an extra layer of protection, even though the AI assistant encrypts everything.
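One way to apply Step 2 consistently is to swap names for codes before any text reaches the assistant. The sketch below is a minimal illustration in Python and is not part of Maple: the function name `pseudonymize`, the `code_book` mapping, and the code `C-017` are all hypothetical. It only catches names you list explicitly, so a manual read-through is still needed.

```python
import re
from typing import Dict


def pseudonymize(text: str, code_book: Dict[str, str]) -> str:
    """Replace each listed client name in `text` with its short code."""
    # Handle longer names first so "Jane Doe" is replaced before "Jane".
    for name in sorted(code_book, key=len, reverse=True):
        pattern = re.compile(r"\b" + re.escape(name) + r"\b", re.IGNORECASE)
        text = pattern.sub(code_book[name], text)
    return text


# Hypothetical code book; store the real one outside the AI workspace.
code_book = {"Jane Doe": "C-017", "Jane": "C-017"}
notes = "Jane Doe reported poor sleep. Jane practiced the breathing exercise twice."
print(pseudonymize(notes, code_book))
# prints: C-017 reported poor sleep. C-017 practiced the breathing exercise twice.
```

Keeping the name-to-code mapping in a separate, secured location means the text you paste contains no direct identifiers even before encryption is applied.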

Step 3: Paste or Dictate Concise Bullets

- Action: Immediately after a session, type or dictate key points (symptoms, themes, homework) into the AI assistant. Ask: "Summarize these in DAP format."

- Privacy & Ethics Check: The Maple AI assistant processes text inside an encrypted enclave; no plaintext ever leaves that boundary.
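If you prefer a consistent prompt over retyping the request each time, Step 3 could be templated along these lines. This is only a sketch: `build_dap_prompt` and its parameters are hypothetical names, and the result is plain text that you would paste into the assistant yourself. It does not call any Maple API.

```python
from typing import List


def build_dap_prompt(client_code: str, bullets: List[str]) -> str:
    """Assemble a prompt asking for a DAP (Data, Assessment, Plan) note."""
    # Drop empty bullets and stray whitespace from dictation or typing.
    cleaned = [b.strip() for b in bullets if b.strip()]
    if not cleaned:
        raise ValueError("At least one session bullet is required.")
    lines = [f"Client {client_code}. Summarize these in DAP format.", ""]
    lines += [f"- {b}" for b in cleaned]
    return "\n".join(lines)


# Hypothetical session bullets, referenced by code rather than by name.
print(build_dap_prompt("C-017", ["Reported poor sleep", "Theme: work stress", "Homework: sleep log"]))
```

Requiring at least one bullet guards against sending an empty request, and the client code keeps the prompt aligned with Step 2.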

Step 4: Review and Refine

- Action: Skim Maple's summary, edit the clinical language, and add your subjective impressions. You can also use Maple to generate treatment plans, create client resources, or develop personalized coping strategies. For example, you can ask Maple to "Generate a treatment plan for a client with anxiety" or "Create a list of local resources for clients struggling with substance abuse."

- Privacy & Ethics Check: The clinician maintains authorship and ensures accuracy, which avoids over-reliance on AI.

Step 5: Store Final Note Securely

- Action: Copy the refined note into your EHR or secure file. Delete the chat if practice policy requires.

- Privacy & Ethics Check: This maintains record-keeping compliance; Maple never retains an unencrypted copy.

Step 6: Log Usage in Supervision

- Action: If you're under supervision or part of a group practice, document that Maple was used compliantly.

- Privacy & Ethics Check: This demonstrates transparency and adherence to agency policy.
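For Step 6, a usage record needs no clinical content at all. The sketch below shows one possible shape for such a record, assuming your practice keeps a simple log; the function `usage_log_entry` and every field name are hypothetical, not an agency or Maple requirement.

```python
import json
from datetime import date
from typing import Optional


def usage_log_entry(client_code: str, purpose: str, consent_on_file: bool,
                    reviewed_by_clinician: bool, day: Optional[date] = None) -> str:
    """Return one JSON line recording that the AI assistant was used."""
    # Refuse to log a use that should not have happened without consent.
    if not consent_on_file:
        raise ValueError("Do not use the assistant before consent is documented.")
    entry = {
        "date": (day or date.today()).isoformat(),
        "client_code": client_code,  # code only, never a name
        "tool": "Maple",
        "purpose": purpose,  # e.g. "DAP note summary"
        "consent_on_file": consent_on_file,
        "reviewed_by_clinician": reviewed_by_clinician,
    }
    return json.dumps(entry, sort_keys=True)


print(usage_log_entry("C-017", "DAP note summary", True, True))
```

Each line records when and why the tool was used, plus the consent and review status, which is typically what a supervisor needs to see.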

Tip: You can also ask Maple to generate client homework (“Provide a 10‑minute grounding exercise”). Because Maple doesn’t keep these outputs in plain text, you can copy the text to share as PDFs or printouts with confidence.

Best Practices & Risk‑Mitigation Checklist

✅ Stay client‑centered. Use Maple to enhance—not replace—clinical judgment. Always review AI output before adding it to the record.

✅ Keep identifiers minimal. Even though Maple encrypts data, using initials or coded references reduces exposure further.

✅ Document consent. Note in the client file (and optionally in Maple chat) that the client agreed to AI‑assisted note summarization.

✅ Update your policies. Add Maple (with its HIPAA stance) to your technology and security policies so staff know it’s approved.

✅ Monitor & review. Periodically audit Maple outputs for accuracy and appropriateness; correct any drift early.

Frequently Asked Questions

Q: Is Maple HIPAA‑compliant?

Maple’s architecture (end‑to‑end encryption, confidential computing) is built with the HIPAA Security Rule in mind. Its security approach goes beyond that of other tools that are officially HIPAA compliant, but Maple has not yet undergone certification.

Q: Does Maple train its AI on my client data?

No. Your encrypted text is never used to train models. Maple uses foundation models that are kept separate from your content.

Q: What if my board audits me?

Maple's code is open source and can be verified by anyone. Because your keys never leave your device unencrypted, no one—not even Maple—can decrypt your chats. Maple can supply your user data to a board, but it will be fully encrypted and unreadable. Only you are able to provide the unencrypted data.

Q: How is this different from turning ChatGPT’s ‘history off’?

‘History off’ is a policy promise that you have no way to verify. Maple’s encryption is a technical guarantee enforced by cryptography.

Q: Do Zero Data Retention policies satisfy confidentiality?

Other AI tools have technically achieved HIPAA compliance through ZDR policies, but ZDR is only a business promise. We built Maple to go one step further: it uses encryption so that we never see the data in the first place.

Q: Can I delete my data and even my account?

Yes. Maple gives you control to delete individual chat sessions. You are also able to delete your entire user account. Both of these actions are final and result in the encrypted data being removed from our servers.

Conclusion: Embrace AI—Safely

AI assistance can give therapists their evenings back, improve documentation quality, and unlock new creative interventions. The barrier has always been confidentiality. With an end‑to‑end‑encrypted architecture, Maple removes that barrier, letting you harness cutting‑edge language models while honoring the sacred trust clients place in you.

Ready to see how much time—and stress—you can save?

→ Start chatting privately with Maple for free today.

Your clients’ secrets—and your peace of mind—are safe with encryption.
